The Fallacy of Measuring Developer Productivity: McKinsey’s Misguided Metrics

At least the execrable, and totally misinformed, recent McKinsey article “Yes, you can measure software developer productivity” has us all talking about “developer productivity”. Not that that’s a useful topic for discussion, btw – see “The Systemic Nature of Productivity”, below. Even talking about “development productivity”, i.e. the productivity of the whole development department, would have systems thinkers like Goldratt spinning in their graves.

The Systemic Nature of Productivity

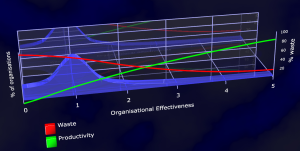

Productivity doesn’t exist in a vacuum; it’s a manifestation of the system in which work occurs. This perspective aligns with W. Edwards Deming’s principle that 95% of the performance of an organisation is attributable to the system, and only 5% to the individual. McKinsey’s article, advocating for specific metrics to measure software developer productivity, overlooks this critical context, invalidating its recommendations from the outset.

Why McKinsey’s Metrics Miss the Mark

Quantitative Tunnel Vision

McKinsey’s emphasis on metrics ignores the complex web of factors that actually contribute to productivity. This narrow focus can lead to counterproductive behaviours.

The Dangers of Misalignment

Metrics should align with what truly matters in software development. By prioritising the wrong metrics, McKinsey’s approach risks incentivising behaviours that don’t necessarily add value to the project or align with organisational goals.

Predicated on Fallacies

McKinsey’s suggestions are riddled with fallacious assumptions, including:

- Benchmarking – long discredited.

- Contribution Analysis – focused on individuals. Music to the ears of traditional management but oh so wrong-headed.

- Talent – See, for example, Deming’s 95/5 for the whole fallacious belief in “talent” as a concept.

- Measuring productivity (measure it, and productivity will go down).

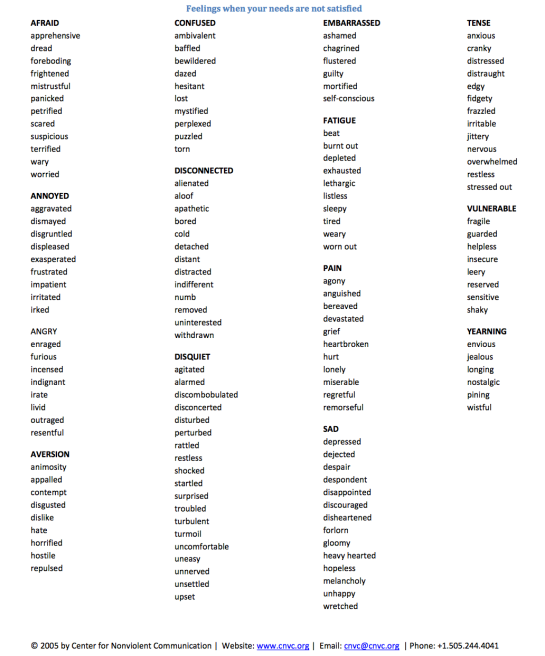

The Real Measure: Needs Attended To and Needs Met

The Essence of Software Development

The core purpose of business – and thus of software development – is to meet stakeholders’ needs. The most relevant metrics therefore centre on these questions: How many stakeholders’ needs have been identified? How many have been, and are being, attended to? How many have been successfully met? These metrics encapsulate the real value generated by a development team – as an integrated part of the business as a whole. (See also: The Needsscape.)
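For anyone who wants to see what tallying those three counts might look like, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Need` record, the `NeedStatus` values, the `summarise_needs` helper and the sample stakeholders are all hypothetical, and a need that has been met is counted as also having been attended to.

```python
from dataclasses import dataclass
from enum import Enum


class NeedStatus(Enum):
    """Hypothetical lifecycle of a stakeholder need (an assumption, not a standard)."""
    IDENTIFIED = "identified"
    ATTENDED_TO = "attended to"
    MET = "met"


@dataclass(frozen=True)
class Need:
    stakeholder: str
    description: str
    status: NeedStatus


def summarise_needs(needs):
    """Count needs identified, attended to (including those met), and met."""
    attended_statuses = (NeedStatus.ATTENDED_TO, NeedStatus.MET)
    return {
        "identified": len(needs),
        "attended_to": sum(1 for n in needs if n.status in attended_statuses),
        "met": sum(1 for n in needs if n.status is NeedStatus.MET),
    }


# Illustrative data only; these stakeholders and needs are made up.
example_needs = [
    Need("customer", "export invoices as PDF", NeedStatus.MET),
    Need("support team", "see a customer's order history", NeedStatus.ATTENDED_TO),
    Need("finance", "monthly revenue report", NeedStatus.IDENTIFIED),
]

print(summarise_needs(example_needs))
# {'identified': 3, 'attended_to': 2, 'met': 1}
```

Note that the counts describe the whole set of stakeholders and the whole system serving them, not any individual or team within it.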

Beyond the Code

Evaluating how well needs are attended to and met requires a focused approach. It includes understanding stakeholders’ requirements, effective collaboration within and across teams and departments, and the delivery of functional, useful solutions. (Maybe not even software – see: #NoSoftware).

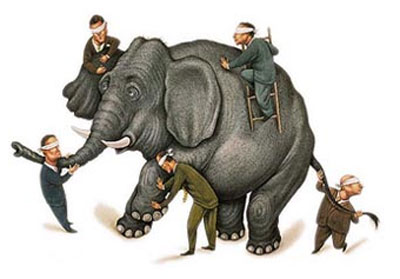

Deming’s 95/5 Principle: The Elephant in the Room

The System Sets the Stage

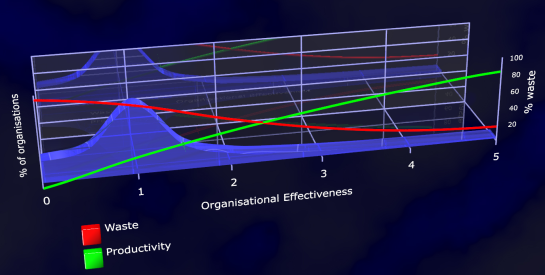

Ignoring the role of the system in productivity is like discussing climate change without mentioning the Sun. Deming’s 95/5 principle suggests that if you want to change productivity, you need to focus on improving the system, not measuring individuals, or even teams, within it.

The Limitations of Non-Systemic Metrics

Individual metrics are the 5% of the iceberg above the water; the system (the culture, processes, and tools that comprise the working environment) is the 95% below. To truly understand productivity, we need metrics that evaluate the system as a whole, not just the tip of the iceberg, and that capture the impact of the work (needs met), not the inputs, outputs or even outcomes.

The Overlooked Contrast: Collaborative Knowledge Work vs Traditional Work

McKinsey’s article advocates for yet more Management Monstrosities, perpetuating the category error of seeing CKW – collaborative knowledge work – as indistinct from traditional models of work.

The Nature of the Work

Traditional work often involves repetitive, clearly defined tasks that lend themselves to straightforward metrics and assessments. Think of manufacturing jobs, where the number of units produced per time period, or per unit of resource committed, can be a direct measure of productivity. Collaborative knowledge work, prevalent in fields like software development, is fundamentally different. It involves complex problem-solving, creativity, and the generation of new ideas, often requiring deep collaboration among team members.

Metrics Fall Short

The metrics that work well for traditional jobs are ill-suited for collaborative knowledge work. In software development, such metrics can be misleading. The real value lies in innovation, problem-solving, and above all meeting stakeholders’ needs.

The Role of Team Dynamics

In traditional work settings, an individual often has a clear, isolated set of responsibilities. In contrast, collaborative knowledge work is highly interdependent. This complexity makes individual performance metrics not just inadequate but potentially damaging, as they can undermine the collaborative ethos needed for the team to succeed.

The Importance of Systemic Factors

The system in which work takes place plays a more significant role in collaborative knowledge work than in traditional roles. Factors like communication channels, decision-making processes, and company culture (shared assumptions and beliefs) can profoundly impact productivity. This aligns with Deming’s 95/5 principle, reinforcing the need for a systemic view of productivity.

Beyond Output: The Value of Intellectual Contributions

Collaborative knowledge work often results in intangible assets like intellectual property, improved ways of working, or enhanced team capabilities. These don’t lend themselves to simple metrics like ‘units produced’ but are critical for long-term success. Ignoring these factors, as traditional productivity metrics tend to do, gives an incomplete and potentially misleading picture of productivity.

A Paradigm Shift is Needed

The nature of collaborative knowledge work demands a different lens through which to evaluate productivity. A shift away from traditional metrics towards more needs-based measures is necessary to accurately capture productivity in modern work environments.

Quality and Productivity: Two Sides of the Same Coin

The Inextricable Link

Discussing productivity in isolation misses a crucial aspect of software development: quality. Quality doesn’t just co-exist with productivity; it fundamentally informs it. High-quality work means less rework, fewer bugs, and, ultimately, a quicker and more effective delivery-to-market approach.

Misguided Metrics Undermine Quality

When metrics focus solely on outputs, they can inadvertently undermine quality. For example, rushing to complete tasks can lead to poor design choices, technical debt, and an increase in bugs, which will require more time to fix later on. This creates a false sense of productivity while compromising quality.

Quality as a Measure of User Needs Met

If we accept that the ultimate metric for productivity is “needs met,” then quality becomes a key component of that equation. Meeting a user’s needs doesn’t just mean delivering a feature quickly; it means delivering a feature that works reliably, is easy to use, and solves the user’s problem effectively. In other words, quality is a precondition for truly meeting needs.

A Systemic Approach to Quality and Productivity

Returning to Deming’s 95/5 principle, both quality and productivity are largely influenced by the system in which developers work. A system that prioritises quality will naturally lead to higher productivity, as fewer resources are wasted on fixing errors or making revisions. By the same token, systemic issues that hinder quality will have a deleterious effect on productivity.

Summary: A Call for Better Metrics

Metrics aren’t the problem; it’s McKinsey’s choice of metrics that demands reconsideration. By focusing on “needs attended to” and “needs met,” and by acknowledging the vital role of the system, organisations can develop a more accurate, meaningful understanding of holistic productivity, and the role of software development therein. Let’s avoid the honey trap of measuring what’s easy to measure, rather than what matters.

Afterword

As with so much of McKinsey’s tripe, the headline contains a grain of truth – “Yes, you can measure software developer productivity”. But the nitty-gritty of the article is just so much toxic misinformation. Many managers will seize on it anyway. Caveat emptor!